On March 13, 2024, the European Parliament approved the Artificial Intelligence (AI) Act. The law is the first comprehensive legal framework on AI anywhere and is likely to serve as a model around the world. The AI Act takes a "risk-based" approach to products and services that use AI, applying different levels of scrutiny and requirements depending on each system's risk classification.

Key takeaways from the EU AI Act:

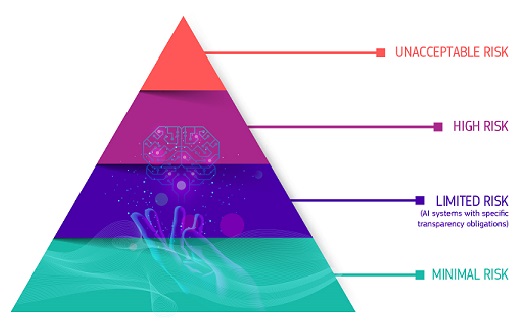

The AI Act sorts AI systems into four risk categories:

- “Minimal Risk,” i.e., AI systems that present minimal risk to individuals’ rights and safety, such as spam filters or AI-enabled video games. These systems face no new obligations under the AI Act.

- “Limited Risk,” i.e., AI systems, such as chatbots, whose main risk stems from a lack of transparency. These systems are subject to transparency and identification requirements, such as ensuring that users are made aware that they are interacting with an AI system.

- “High Risk,” i.e., AI systems such as those used in healthcare or critical infrastructure. These systems are subject to stricter requirements, including technical documentation, data governance, human oversight, security, conformity assessments, and reporting obligations.

- “Unacceptable Risk,” i.e., AI systems that present a clear threat to the safety, livelihoods and rights of people. These systems will be banned, with narrow exceptions for law enforcement.

Separately, general-purpose AI models, which perform generally applicable functions such as image and speech recognition, translation, and content generation, must adhere to certain transparency requirements, including compliance with EU copyright law and publishing summaries of the content used for training. General-purpose AI models that pose systemic risks are subject to more stringent requirements, such as model evaluations, risk assessments, and testing and reporting requirements.

Enforcement will be coordinated through an “AI Office” within the European Commission, which will work with the EU member states on governance and enforcement. Penalties for violations are significant: fines of up to EUR 35 million or 7% of global annual turnover, whichever is higher, for prohibited AI practices, and up to EUR 15 million or 3% of global annual turnover, whichever is higher, for most other breaches.

The regulatory framework defines four levels of risk for AI systems. (Source: European Commission)

Who this affects:


- The AI Act will affect EU businesses as well as non-EU businesses that provide, develop or use AI systems or models within the EU.

What’s next?


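- The AI Act still needs to be formally adopted by the EU member states through the Council of the EU and published in the Official Journal, which seems likely to happen by May 2024. It will enter into force 20 days after publication, and its obligations will then phase in, starting 6 months after entry into force and continuing for up to 3 years after that.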

- While there is time before these requirements take full effect, now is a good time for companies that currently develop, market, or use AI tools (or plan to) to start putting processes in place that will facilitate compliance with the AI Act. Useful practices include conducting an AI audit, developing a risk management strategy, appointing stakeholders within the organization to manage these tasks as things unfold, investing in training and awareness, and generally staying informed. These steps will also better position companies as other AI legislation comes into effect (spoiler alert: Nevada is already there, and California is likely to follow soon).

To learn more, read the summary of the AI Act published by the European Commission.

For inquiries or to learn more about our AI legal services at Foster Garvey, please reach out to Erin Snodgrass, Carrie Lofts, or Claire Hawkins.